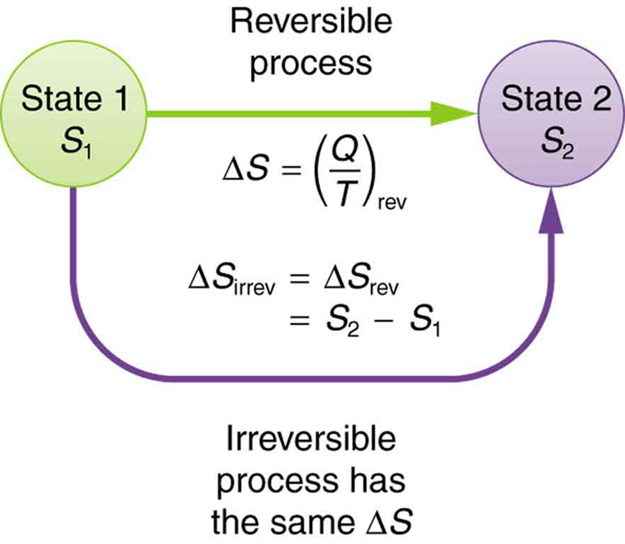

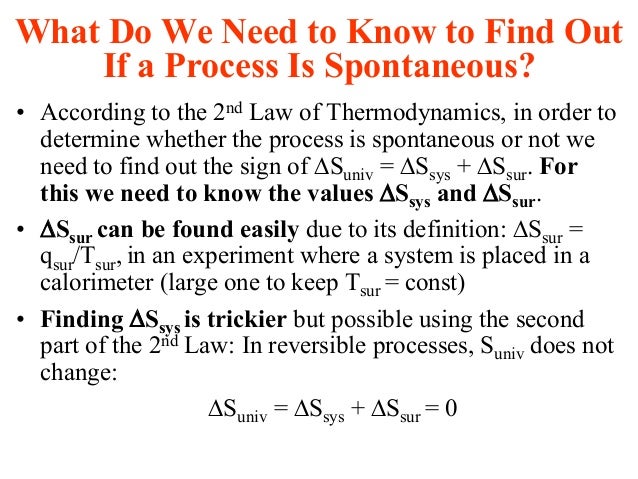

Entropy is, broadly, the degree of disorder or uncertainty in a system. In thermodynamics it is the measure of a system's thermal energy per unit temperature that is unavailable for doing useful work. On the scale of the universe, the word also describes the degradation of matter and energy toward an ultimate state of inert uniformity: the general trend of the universe toward disorder.

The fundamental equation of entropy S is S = k ln W, where W is the number of ways of arranging the particles so as to produce a given state, and k is Boltzmann's constant. S is therefore a measure of how disordered the system is: the greater W is, the greater S is, and the greater the disorder. In a completely rational set of units, in which temperature is measured in units of energy, k equals 1 and entropy is just a pure number with no units.

The increase in entropy of a system, dS, when an amount of heat delta Q is added reversibly at temperature T, is given by dS = delta Q/T. The more heat is transferred per unit temperature, the larger the entropy change. When heat is added the entropy increases; when heat is removed the entropy decreases.
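The two formulas can be tried out numerically. The following is a minimal Python sketch, not a reference implementation: the function names `boltzmann_entropy` and `entropy_change` are hypothetical, and `entropy_change` assumes the heat is exchanged reversibly at a constant temperature.

```python
import math

# Boltzmann constant in J/K (exact value in the SI since 2019).
K_B = 1.380649e-23


def boltzmann_entropy(num_arrangements: float) -> float:
    """Return S = k ln W in J/K for W possible arrangements (hypothetical helper)."""
    if num_arrangements < 1:
        raise ValueError("W must be at least 1")
    return K_B * math.log(num_arrangements)


def entropy_change(heat_joules: float, temperature_kelvin: float) -> float:
    """Return dS = delta Q / T in J/K.

    Assumes the heat is exchanged reversibly at constant temperature.
    Positive heat (added) raises the entropy; negative heat lowers it.
    """
    if temperature_kelvin <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return heat_joules / temperature_kelvin


if __name__ == "__main__":
    # A single possible arrangement means no disorder: S = 0.
    print(boltzmann_entropy(1))
    # Adding 300 J at 300 K raises the entropy by 1 J/K.
    print(entropy_change(300.0, 300.0))
```

Because S depends on the logarithm of W, squaring the number of arrangements doubles the entropy rather than squaring it.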

Units of entropy

How to understand Shannon's information entropy

Entropy measures the degree of our lack of information about a system. In information theory the symbol for entropy is H, and the constant k_B is absent. For a set of outcomes with probabilities p_i, H = −Σ p_i log p_i; this expression is called the Shannon entropy or information entropy. The base of the logarithm sets the unit: base 2 gives bits (or 'shannons'), base e gives 'natural units' (nats), and base 10 gives 'dits', 'bans', or 'hartleys'. These days information entropy is almost always measured in bits.

In thermodynamics, entropy is a measure of the system's thermal energy unavailable for work per unit temperature, and its change is expressed as dS = delta Q/T. The SI unit of the Boltzmann constant, and therefore of entropy, is J/K. The entropy unit (e.u.) is a non-SI unit of thermodynamic entropy equal to one calorie per kelvin per mole, or 4.184 joules per kelvin per mole. In chemistry, entropy units are used to express entropy changes.

The specific entropy (s) of a substance is its entropy per unit mass. It equals the total entropy (S) divided by the total mass (m).

When you heat a molecule, say a gas molecule, it takes up a certain amount of energy before its temperature can rise. Heat received by a molecule is divided equally among its modes of motion, called degrees of freedom (translation, rotation, and vibration), and each gets ½kT of energy on average. A molecule can take in only as much heat as it can store. While the average kinetic energy of a molecule is the same for all gases and depends only on temperature, the energy stored per unit mass per kelvin differs from one gas to another. This is what you measure as specific heat, expressed in kJ/(kg·K) or J/(kg·K). In this sense specific heat is a measure of the energy, in joules, that a unit mass of the substance stores as molecular motion for each kelvin of temperature rise.
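To see how the choice of logarithm base changes the unit of information entropy, here is a minimal Python sketch. The function name `shannon_entropy` and its `base` parameter are hypothetical, chosen for illustration. It uses the standard formula H = −Σ p_i log p_i and treats outcomes with zero probability as contributing nothing.

```python
import math


def shannon_entropy(probabilities, base=2.0):
    """Return H = -sum(p * log(p)) for a discrete distribution.

    base=2 gives bits (shannons), base=math.e gives nats,
    base=10 gives hartleys (dits, bans).
    """
    if any(p < 0 for p in probabilities):
        raise ValueError("probabilities must be non-negative")
    if not math.isclose(sum(probabilities), 1.0, rel_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    # Outcomes with p == 0 are skipped: p * log(p) tends to 0 as p -> 0.
    return -sum(p * math.log(p, base) for p in probabilities if p > 0)


if __name__ == "__main__":
    fair_coin = [0.5, 0.5]
    print(shannon_entropy(fair_coin, base=2))       # entropy in bits
    print(shannon_entropy(fair_coin, base=math.e))  # entropy in nats
    print(shannon_entropy(fair_coin, base=10))      # entropy in hartleys
```

A fair coin carries one bit of entropy; the same quantity expressed in nats is ln 2, since changing the base only rescales the result by a constant factor.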

Entropy is a measure of the number of ways a system can be arranged, often taken to be a measure of "disorder" (the higher the entropy, the higher the disorder).

Heat capacity or thermal capacity is a physical property of matter, defined as the amount of heat to be supplied to an object to produce a unit change in its temperature. The SI unit of heat capacity is joule per kelvin (J/K). The SI unit of specific heat capacity is joule per kelvin per kilogram, J⋅kg−1⋅K−1.
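The relation between the two quantities and their units can be sketched in a few lines of Python. The function names are hypothetical, and the numbers in the usage example are illustrative round values (roughly those of liquid water), not measured data. Specific heat capacity is taken here as heat capacity divided by the mass of the sample.

```python
def heat_capacity(heat_joules: float, delta_t_kelvin: float) -> float:
    """Return heat capacity C = Q / delta T in J/K."""
    if delta_t_kelvin == 0:
        raise ValueError("temperature change must be non-zero")
    return heat_joules / delta_t_kelvin


def specific_heat_capacity(heat_capacity_j_per_k: float, mass_kg: float) -> float:
    """Return specific heat capacity c = C / m in J/(kg*K)."""
    if mass_kg <= 0:
        raise ValueError("mass must be positive")
    return heat_capacity_j_per_k / mass_kg


if __name__ == "__main__":
    # Illustrative values: 8368 J warms a 2 kg sample by 1 K.
    c_sample = heat_capacity(8368.0, 1.0)           # J/K, for the whole sample
    print(specific_heat_capacity(c_sample, 2.0))    # J/(kg*K), per kilogram
```

Heat capacity describes a particular object, so it grows with the size of the sample; dividing by mass gives a property of the substance itself.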

The specific heat capacity (symbol cp) of a substance is the heat capacity of a sample of the substance divided by the mass of the sample.

Development of entropy in a thermodynamic system

Entropy production (or generation) is the amount of entropy produced during a heat process; it is used to evaluate the efficiency of the process. Entropy is produced in irreversible processes, which reduces the system performance. The importance of avoiding irreversible processes (hence reducing the entropy production) was recognized as early as 1824 by Carnot. In 1865 Rudolf Clausius expanded his previous work from 1854 on the concept of "unkompensierte Verwandlungen" (uncompensated transformations), which, in our modern nomenclature, would be called the entropy production. In the same article in which he introduced the name entropy, Clausius gives, in equation (71), the expression for the entropy production for a cyclical process in a closed system, which he denotes by N.
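A simple case of entropy production is heat flowing directly from a hot body to a cold one. The sketch below is an illustration under stated assumptions, not Clausius's own expression: both bodies are treated as reservoirs at fixed temperatures, the hot one loses entropy Q/T_hot, the cold one gains Q/T_cold, and the difference is the entropy produced. The function name `entropy_produced` is hypothetical.

```python
def entropy_produced(heat_joules: float, t_hot_kelvin: float, t_cold_kelvin: float) -> float:
    """Entropy produced (J/K) when heat flows from a hot to a cold reservoir.

    Assumes both reservoirs stay at constant temperature:
    S_produced = Q / T_cold - Q / T_hot, which is never negative
    when T_hot >= T_cold and Q >= 0.
    """
    if t_hot_kelvin <= 0 or t_cold_kelvin <= 0:
        raise ValueError("temperatures must be positive (kelvin)")
    if t_hot_kelvin < t_cold_kelvin:
        raise ValueError("t_hot_kelvin must not be below t_cold_kelvin")
    if heat_joules < 0:
        raise ValueError("heat must be non-negative")
    return heat_joules / t_cold_kelvin - heat_joules / t_hot_kelvin


if __name__ == "__main__":
    # 600 J flowing from 600 K to 300 K: irreversible, entropy is produced.
    print(entropy_produced(600.0, 600.0, 300.0))
    # Equal temperatures: the reversible limit, nothing is produced.
    print(entropy_produced(600.0, 300.0, 300.0))
```

The larger the temperature gap across which the heat falls, the more entropy is produced, and the more potential work is lost.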
